Impressions and Links

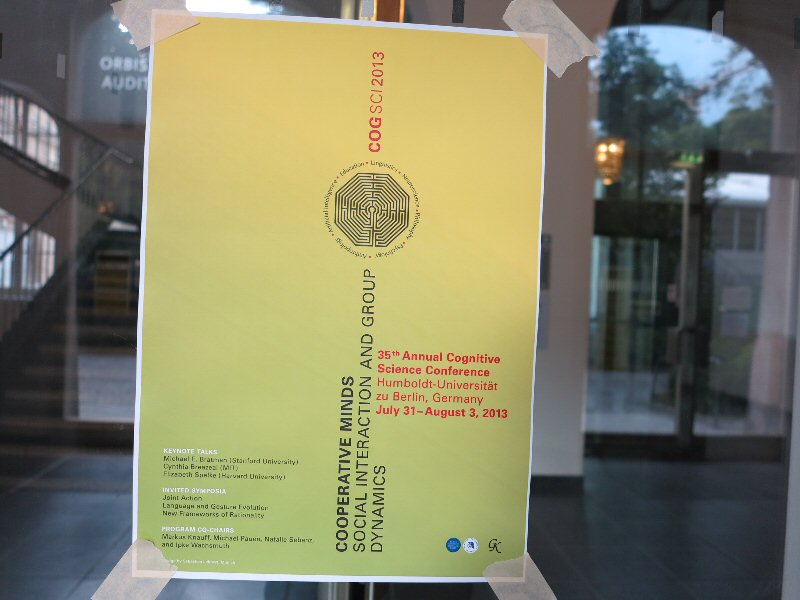

from CogSci 2013

(The 35th annual meeting of the Cognitive Science Society).

In Berlin, Germany. July 31 - Aug 3, 2013.

I had the great pleasure of taking part in CogSci 2013. Below you will find impressions from the conference, and links for further reading. Wonderful stuff! Certainly, I'm already looking forward to CogSci 2014 in Quebec!

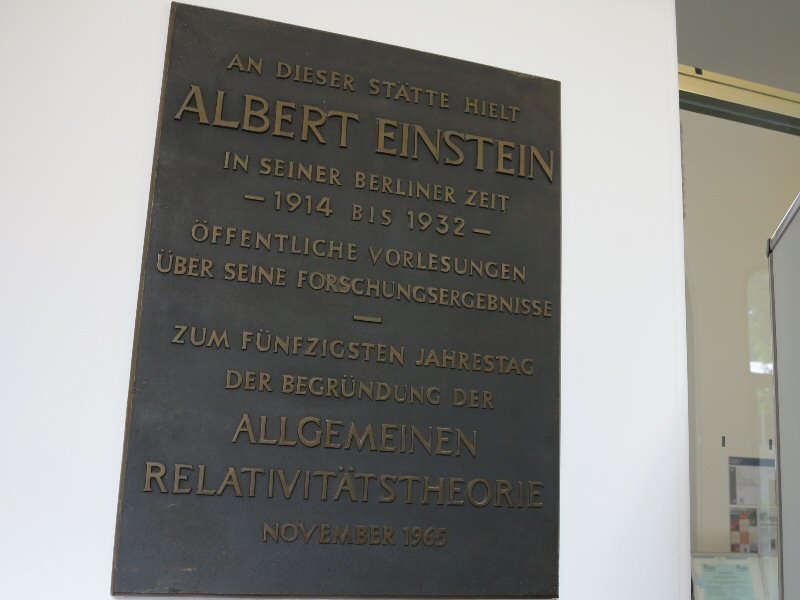

The CogSci 2013 conference was held at the Humboldt-Universität in Berlin, Germany.

Founded in 1810 as the University of Berlin, Humboldt-Universität has its main building in the centre of Berlin, on the boulevard Unter den Linden.

The first semester at the newly founded Berlin University took place in 1810, with 256 students and 52 lecturers in the faculties of law, medicine, theology and philosophy. Today, the Humboldt University has approximately 37,000 students.

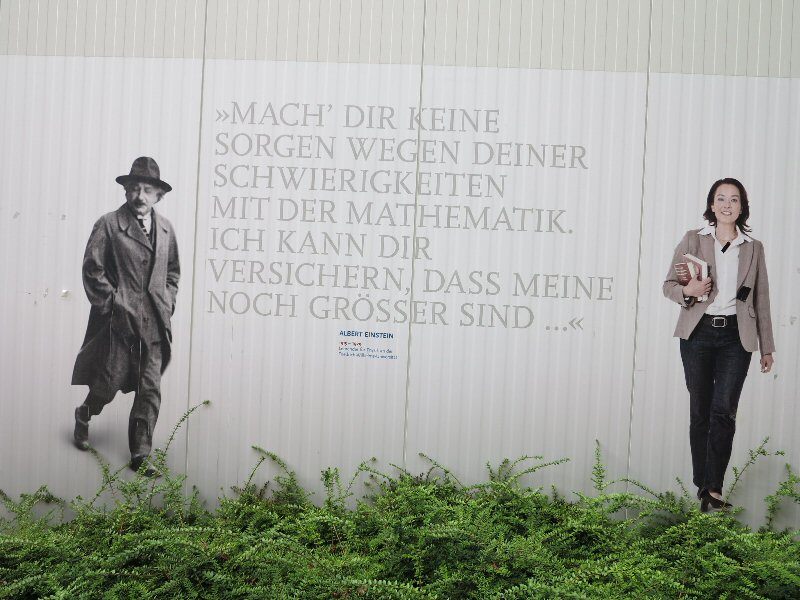

The university has been home to many of Germany's greatest thinkers of the past two centuries, among them G.W.F. Hegel, Schopenhauer, Einstein, Planck, Mommsen and Schrödinger.

1. Introduction.

1.1. Venue of the Conference: Humboldt Universität Berlin.

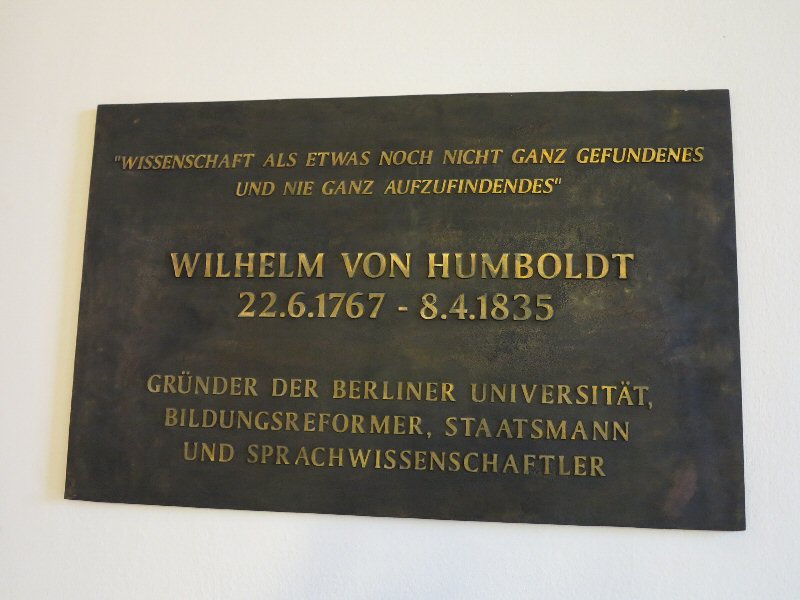

The venue of the conference was Humboldt Universität, Berlin. Named after its founder Wilhelm and his brother, the geographer Alexander von Humboldt, Humboldt Universität is a university with a long, proud and tumultuous history. It was indeed here that the ''Humboldtian'' spirit of combining teaching and research was invented. All in all, a very interesting and fitting venue for the CogSci 2013 conference.

The university homepage describes (some of) Humboldt's ideas:

To this day, the University of Berlin, founded in 1810, is regarded as the ''mother of all modern universities''. This is the achievement of the concept of the university developed by the scholar and statesman Wilhelm von Humboldt.

Humboldt envisioned a ''Universitas litterarum'' in which the unity of teaching and research is realised and students receive an all-round humanistic education. This idea proved successful, spread around the world, and over the following century and a half gave rise to many universities of the same type.

As Prussian Minister of Education, Humboldt stated that:

The ultimate task of our existence is to give the fullest possible content to the concept of humanity in our own person, through the impact of actions in our own lives.

... Education, truth and virtue must be disseminated to such an extent that the concept of mankind takes on a great and dignified form in each individual...

... (This shall be achieved personally by each individual) who must absorb the great mass of material offered to him by the world around him and by his inner existence, using all the possibilities of his receptiveness; he must then reshape that material with all the energies of his own activity and appropriate it to himself so as to create an interaction between his own personality and nature in a most general, active and harmonious form...

According to Wikipedia:

Humboldt was also a philosopher and wrote On the Limits of State Action in 1791-1792, one of the boldest defences of the liberties of the Enlightenment (Society should be reformed using reason. Scientific thought, skepticism, and intellectual interchange should all be encouraged. Indeed, the scientific method should help us gain more knowledge).

It influenced John Stuart Mill's essay On Liberty, through which von Humboldt's ideas became known in the English-speaking world. Humboldt outlined an early version of what Mill would later call the ''harm principle'' (actions of individuals should only be limited to prevent harm to other individuals).

A man of many talents, Humboldt was also a linguist and translated the Greek poets Pindar and Aeschylus into German.

Surely, Pindar's victory odes describe the prestige of victory in ancient Hellenic festivals. Still, Humboldt must have felt that the Olympic spirit of Pindar's ode was very much in sync with his own aspirations...

If ever a man strives with all his soul's endeavour, sparing himself neither expense nor labour to attain true excellence, then must we give to those who have achieved the goal, a proud tribute of lordly praise, and shun all thoughts of envious jealousy.

What a truly great place to have a conference!

1.2. CogSci 2013.

CogSci 2013's theme was ''Cooperative Minds: Social Interaction and Group Dynamics'', reflecting a growing interest in moving from the study of individual cognition to the social realm. Still, over the four intense days of the conference, presentations dealt with everything from individual neurons to human group dynamics, which all in all made it a very exciting event to be part of.

1.3. Page Overview. Impressions and Links, Preparations and Background Material.

In sections (2 - 5) you will find my impressions and links from (some of) the presentations at the conference

(Please notice: These notes don't do justice to the often brilliant presentations that initiated them! So, please read the original presentations to avoid any distortions ...).

The conclusion in section 6 is about seeing minds and cognition everywhere, even at train stations. With more to follow in the future.


2. Impressions from July 31st 2013.

On the first day of the conference I attended the workshop ''A General Purpose Architecture for Building Spiking Neuron Models of Biological Cognition''. Chris Eliasmith and Terrence C. Stewart led the workshop, and made it all very memorable with many good insights and clever comments throughout the day.

The workshop ''A General Purpose Architecture for Building Spiking Neuron Models of Biological Cognition'' was held in the Karl Weierstrass auditorium.

2.1. How to Build a Brain. A Neural Architecture for Biological Cognition.

In the book ''How to Build a Brain. A Neural Architecture for Biological Cognition'' Chris Eliasmith writes:

I suspect that when most of us want to know ''how the brain works'', we are somewhat disappointed with an answer that describes how individual neurons work.

Similarly, an answer that picks out the parts of the brain that have increased activity while reading words, or an answer that describes what chemicals are in abundance when someone is depressed, is not what we have in mind. Certainly these observations are all part of the answer, but none of them traces the path from perception to action, detailing the many twists and turns along the way.

So, in the book he sets out to describe the basic principles, the architecture, for building the ''wheels'' and ''gears'' needed to drive cognition.

And this was obviously also the theme in this workshop.

2.2. Neural Simulations.

The brain model presented in the workshop (and in the book) is fully implemented in spiking neurons and able to perform (certain types of) object recognition, drawing, induction over structured representations, and reinforcement learning (some of the ''wheels'' and ''gears'' needed to drive cognition) - without changing the model.

According to Chris Eliasmith, the model is constrained by ''data relating to neurotransmitter usage in various brain areas, detailed neuroanatomy, single neuron response curves, and so forth''.

And he believes that the model (presented here) is currently the largest functional simulation of a brain.
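As a rough illustration of what ''implemented in spiking neurons'' can mean, here is a minimal sketch of a single leaky integrate-and-fire (LIF) neuron, a common simple spiking neuron model. This is not code from the workshop or from Nengo: the function name `simulate_lif` and all parameter values are assumptions chosen only for illustration.

```python
def simulate_lif(current, t_end=1.0, dt=0.001, tau_rc=0.02,
                 tau_ref=0.002, threshold=1.0):
    """Return the spike times (in seconds) of one LIF neuron driven by a
    constant input current. All parameter values are illustrative."""
    voltage = 0.0
    refractory = 0.0
    spikes = []
    for step in range(int(round(t_end / dt))):
        t = step * dt
        if refractory > 0.0:
            # The neuron is silent for a short period after each spike.
            refractory -= dt
            continue
        # Euler step: the voltage leaks towards the input current.
        voltage += dt * (current - voltage) / tau_rc
        if voltage >= threshold:
            spikes.append(t)
            voltage = 0.0
            refractory = tau_ref
    return spikes


weak = simulate_lif(0.9)      # input below threshold
strong = simulate_lif(2.0)
stronger = simulate_lif(4.0)
print(len(weak), len(strong), len(stronger))
```

In this toy neuron, a sub-threshold input produces no spikes at all, and a larger input produces a higher firing rate. The information is carried by when and how often the neuron spikes.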

Obviously, there are other brain models out there. In the book, Chris Eliasmith writes:

There are several impressive examples of large-scale, single-cell neural simulations.

The best among these include Modha's Cognitive Computation project at Almaden Labs, Markram's Blue Brain project at EPFL and Izhikevich's large-scale cortical simulations.

...

Izhikevich's simulation is on the scale of the human cortex, running approximately 100 billion neurons. Modha's work is on the scale of a cat cortex (1 billion neurons), but is much faster than Izhikevich's simulations.

...

Critically, none of these past models have demonstrated clear perceptual, cognitive or motor function. Random connectivity does not allow any particular function to be performed, and focus on the cortical column makes it unclear what kind of inputs the model should have and how to interpret the output.

...

The model presented here, in contrast, has image sequences as input and motor behaviour as output.

It demonstrates recognition, classification, language-like induction, serial working memory, and other perceptual, cognitive and motor behaviours.

For this reason, I believe, that despite its modest scale (2.5 million neurons), it has something to offer to the current state-of-the-art.

At the workshop, Eliasmith also noted that modelling should be done at an ''appropriate'' level.

In the Blue Brain project every neuron might be modelled with 100 equations or so, but there needs to be proof that this is necessary.

So far, it could be argued that simpler models capture just as many of the essential features (?).

It should also be noted that many models are actually pretty similar.

E.g. Bayesian models might seem very different from the Semantic Pointer Architecture presented in this workshop.

But it should be possible to transform some of the Bayesian models into an implementation in the Semantic Pointer Architecture.

I.e. basically, any model that can be expressed as vector operations can be implemented in the Nengo neural simulator (used to build the Spaun brain model this workshop was all about).

2.3. Symbolism, Connectionism and Dynamicism - Three major approaches to theorizing about the nature of cognition.

Historically, there have been three major approaches to theorizing about the nature of cognition: Symbolism, Connectionism and Dynamicism. Each of the approaches has given rise to specific models and architectures, and has relied heavily on a preferred metaphor for understanding the brain.

Chris Eliasmith gave us a short description of each approach:

Symbolicism. The classical approach (also known as ''Good Old Fashioned Artificial Intelligence'', GOFAI) relies heavily on the ''mind as computer'' metaphor.

Jerry Fodor, for one, has argued that the impressive theoretical power provided by this metaphor is good reason to suppose that cognitive systems have a symbolic ''language of thought'' that, like a computer program, expresses the rules that the system follows (Fodor, 1975).

Connectionism. A second approach is connectionism (also known as Parallel Distributed Processing, PDP).

Connectionism explains cognitive phenomena by constructing models that consist of large networks of nodes that are connected in various ways. Each node performs a simple input/output mapping, but when grouped together in large networks these nodes are interpreted as implementing rules, analyzing patterns, or performing any of several cognitively relevant behaviours.

Proponents of the connectionist approach presume that the functioning of the mind is like the functioning of the brain. Here, the brain is the mind.

Dynamicism. The final approach in contemporary cognitive science is dynamicism, which is often allied with ''embedded'' or ''embodied'' approaches to cognition.

The analysis of cognitive dynamics highlights the essential coupling of cognitive systems to their environment.

Dynamic constraints are clearly imposed by the environment on our behavior (e.g. we must see and avoid the cheetah before it eats us).

If our high-level cognitive behaviors are built on low-level competencies, then it should not be surprising that a concern for low-level dynamics has led several researchers to emphasize that real cognitive systems are embedded within environments that affect their cognitive dynamics.

Proponents of this view have convincingly argued that the nature of that environment can have a significant impact on what cognitive behaviors are realized.

Bayesian approach:

(According to Eliasmith) It is tempting to identify a fourth major approach - what might be called the Bayesian approach. This work focuses on probabilistic inference to help in a rational analysis of human behavior. Unlike the other approaches, the resulting models are largely phenomenological (i.e. they capture phenomena well, but not the relevant mechanisms)...

There are no cognitive architectures that take optimal statistical inference as being the central kind of computation performed by cognitive systems. Nevertheless, a lot has been learned about the nature of human cognition using this kind of analysis. Still, the focus here will be on the three approaches that have driven mechanistic cognitive modelling to date.

Each of the three approaches grew out of critical evaluations of its predecessors.

Still, each of the metaphors mentioned has offered its own insights regarding cognitive systems, that will be worth remembering as we build new models.

2.4. Bridging the gap. From Neurons to Cognition. The Semantic Pointer Hypothesis.

According to Richard Feynman:

What I cannot create I do not understand.

Chris Eliasmith restated this as: Creating a cognitive system would provide one of the most convincing demonstrations that we truly understand such a system.

And surely, as we are our brains, it is important to understand our brains.

After all, brains control not only the four Fs (feeding, fleeing, fighting and, yes, reproduction) - but everything.

In the quest to understand and build, we were then introduced to semantic pointers, whose purpose is to bridge the gap between the Neural Engineering Framework and the domain of cognition (in short, building a model of what might be going on in the brain):

Higher level cognitive functions in biological systems are made possible by semantic pointers. Semantic pointers are neural representations that carry partial semantic content and are composable into the representational structures necessary to support complex cognition.

The semantic information that is contained in a semantic pointer is usefully thought of as a compressed version of the information contained in a more complex representation.

Chris Eliasmith writes [p. 82 in ''How to Build a Brain'']:

Pointers are reminiscent of symbols. Symbols, after all, are supposed to gain their computational utility from the arbitrary relationship they hold with their contents.

The semantic pointer hypothesis suggests that neural representations implicated in cognitive processing share central features with the traditional notion of a pointer...

In short, the hypothesis suggests that the brain manipulates compact, address-like representations to take advantage of the significant efficiency and flexibility afforded by such representations. Relatedly, such neural representations are able to act like symbols in the brain.

And ''pointers'' might indeed be central to the way we think. I.e. semantic processing in the brain might start a kind of simulation [p. 85]:

When people are asked to think and reason about objects (such as a watermelon), they do not merely activate words that are associated with watermelons, but seem to implicitly activate representations that are typical of watermelon backgrounds, bring up emotional associations with watermelons, and activate tactile, auditory, and visual representations of watermelons.

And, according to Chris Eliasmith, providing a neurally grounded account of the computational processes underwriting cognitive function (such as semantic processing) is precisely what the Semantic Pointer Architecture is trying to do.

Indeed, a better computational account of how such semantic processing might be working within the brain is high on the agenda in several labs around the world.

Chris Eliasmith quotes Larry Barsalou as saying [p. 87]:

Perhaps the most pressing issue surrounding this area of work is the lack of well-specified computational accounts. Our understanding of simulators, simulations, situated conceptualizations and pattern completion inference would be much deeper, if computational accounts specified the underlying mechanisms. Increasingly, grounding such accounts in neural mechanisms is obviously important.

The precise details of (all of) these ideas might not have been completely clear to all of us at this stage of the workshop. But, certainly, semantic processing and simulations will be important in the coming phases of understanding cognition and building cognitive models.
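To make the idea of compact, composable vector representations a little more concrete, here is a minimal sketch of binding two role/filler pairs into one vector with circular convolution, and then approximately recovering a filler. Circular convolution is my choice of binding operation for this illustration (it is the operation used in holographic reduced representations); the notes above do not spell out the mechanism. The vector names (`colour`, `red`, `shape`, `circle`) and the dimension are hypothetical.

```python
import math
import random


def random_vector(dim, rng):
    """Random vector with expected length of about 1."""
    return [rng.gauss(0.0, 1.0 / math.sqrt(dim)) for _ in range(dim)]


def bind(a, b):
    """Circular convolution: combines two vectors into one of the same size."""
    n = len(a)
    return [sum(a[j] * b[(i - j) % n] for j in range(n)) for i in range(n)]


def approx_inverse(a):
    """Involution of a; binding with it approximately undoes binding with a."""
    return [a[0]] + a[:0:-1]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


rng = random.Random(0)
DIM = 256  # illustrative dimension, not a figure from the book
colour, red, shape, circle = (random_vector(DIM, rng) for _ in range(4))

# One vector that stores "colour=red" and "shape=circle" at the same time.
scene = [p + q for p, q in zip(bind(colour, red), bind(shape, circle))]

# Ask "what is the colour?" by unbinding with the colour role.
recovered = bind(approx_inverse(colour), scene)
sim_red = cosine(recovered, red)
sim_circle = cosine(recovered, circle)
print(round(sim_red, 2), round(sim_circle, 2))
```

The combined `scene` vector has the same dimension as its parts, so it works like a compressed summary: the recovered vector is a noisy version of `red`, clearly more similar to `red` than to `circle`, but not an exact copy.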

2.5. The flow of Information.

After Representations and Semantic Pointers we went on to discuss Flow of Control:

- The control of what course of action to pursue next.

- The control of which representations to employ.

- The control of how to manipulate current representations given the current context.

etc.

According to Chris Eliasmith [p. 164]:

For well over 20 years, the basal ganglia have been centrally implicated in the ability to choose between alternative courses of action.

Damage to the basal ganglia is known to occur in several diseases of motor control, including Parkinson's and Huntington's diseases. Interestingly, these ''motor'' diseases have also been shown to result in significant cognitive defects.

Consequently, the basal ganglia are now understood as being responsible for ''action'' selection broadly construed.

Understanding Flow of Information can be broken down into two parts:

- Determining what an appropriate control signal is (what should the next course of action be, given the currently available information).

- And applying that control signal to affect the state of the system.
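The two parts above (determining a control signal, then applying it) can be sketched as a simple winner-take-all loop. This is only a toy under my own assumptions, not the basal ganglia model from the workshop: the rule names, the three-element state vector and the use of a dot product as ''utility'' are all hypothetical.

```python
def utilities(state, rules):
    """Score each rule by how well its condition matches the current state."""
    return {name: sum(s * c for s, c in zip(state, condition))
            for name, (condition, _effect) in rules.items()}


def select_action(state, rules):
    """Part 1: determine the control signal (the best-matching rule)."""
    scores = utilities(state, rules)
    return max(scores, key=scores.get)


def apply_action(state, rules, action):
    """Part 2: apply the control signal to change the state of the system."""
    _condition, effect = rules[action]
    return effect(state)


# Hypothetical state layout: [digit seen, answer ready, idle]
RULES = {
    "store_digit": ([1.0, 0.0, 0.0], lambda state: [0.0, 1.0, 0.0]),
    "report_answer": ([0.0, 1.0, 0.0], lambda state: [0.0, 0.0, 1.0]),
    "wait": ([0.0, 0.0, 1.0], lambda state: list(state)),
}

state = [0.9, 0.2, 0.1]
first = select_action(state, RULES)
state = apply_action(state, RULES, first)
second = select_action(state, RULES)
print(first, "->", second)  # store_digit -> report_answer
```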

Chris Eliasmith continues [p. 171]:

Interestingly, all areas of the neocortex, with the exception of the primary visual and auditory cortices, project to the basal ganglia. Thus, controlling the flow of information between cortical areas is a natural role for the basal ganglia.

Although there is evidence that cortex can perform ''default'' control without much basal ganglia influence, the basal ganglia make the control more flexible, fluid, and rapid.

...

In short, the basal ganglia control the exploitation of cortical resources by selecting appropriate motor and cognitive actions, based on representations available in cortex itself.

There are some exceptions though; the thalamus helps in controlling major shifts in system functions:

The thalamus is ideally structured to allow it to monitor a ''summary'' of the massive amounts of information moving through cortex and from basal ganglia to cortex. Not surprisingly, thalamus is known to play a role in major shifts in system functions (e.g. from wakefulness to sleep) and participates in controlling the general level of arousal of the system.

(And) attention is (obviously) another way of controlling the flow of information.

Chris Eliasmith writes [p. 177]:

.. It consists of a hierarchy of visual areas (in the brain, from V1 to PIT), which receive a control signal from a part of the thalamus called the ventral pulvinar (Vp)... A variety of anatomical and physiological evidence suggests that pulvinar is responsible for providing a coarse, top-down attention control signal to the highest level of the visual hierarchy.

In the basal ganglia model (presented to us at the workshop) only about 100 neurons needed to be added for each new rule.

Of those, about 50 need to be added to the striatum, which contains about 55 million neurons.

So, in a model like this, about one million rules can be encoded, which seems a reasonable number of rules (large enough to be interesting, given the possibility of combinations).
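The ''about one million rules'' estimate follows directly from the figures above. A small back-of-the-envelope check, assuming (as an upper bound) that every striatal neuron could be devoted to rules:

```python
# Figures quoted in the notes above.
NEURONS_PER_RULE = 100
STRIATAL_NEURONS_PER_RULE = 50
STRIATUM_NEURONS = 55_000_000

# Upper bound: all striatal neurons are used for rules and nothing else.
max_rules = STRIATUM_NEURONS // STRIATAL_NEURONS_PER_RULE
total_neurons_for_max_rules = max_rules * NEURONS_PER_RULE

print(f"{max_rules:,} rules fit into the striatum")
print(f"{total_neurons_for_max_rules:,} neurons needed in total")
```

This gives 1,100,000 rules, i.e. roughly the one million mentioned at the workshop.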

2.6. Spaun. Semantic Pointer Architecture Unified Network.

After the presentation of some of the central elements in the Semantic Pointer Architecture, it was time to tie it all together in the Spaun system (Semantic Pointer Architecture Unified Network). Spaun is a direct application of the SPA to eight different tasks, but in a limited domain.

A number of Spaun videos can be seen here: Spaun Videos.

The Spaun model consists of three hierarchies, an action selection mechanism, and five subsystems. The hierarchies include a visual hierarchy that compresses image input into a semantic pointer, a motor hierarchy that de-references an output semantic pointer to drive a two degree-of-freedom arm, and a working memory that compresses input (in order) to store serial position information.

The working memory also provides stable representations of intermediate task states, task subgoals, and context.

The action selection mechanism is based on the basal ganglia model described above [p. 256].

Indeed, a very impressive number of brain centers are modelled in Spaun.

(Obviously, the whole brain is not modelled yet, and equally obviously, it is still only a simplified model (good or bad) of what might be going on in a brain. After all, building a brain is a difficult thing to do...).

The functional architecture of the Spaun model can be seen in figure 7.4 [p.253] of the book.

Table 7.2 in the book [p. 254] gives the intended mapping between Spaun's simplified functional model and a real brain's corresponding neuroanatomy (and, naturally, the functionality of the real brain centers was only dealt with very superficially in the workshop. Presenting the model was the main objective here...).

(Probably, the take-home message here is that building a (model of a) brain involves an enormous amount of components, and a lot of work...):

| Spaun Functional Architecture Element | Corresponding Brain Area (Acronym & Description) |
|---|---|
| Visual Input | V1 - Primary visual cortex. |
| | V2 - Secondary visual cortex. |
| | V4 - Extrastriate visual cortex (Combines input from V2 to recognize simple shapes). |
| | IT - Inferior temporal cortex (The highest level of the visual hierarchy, representing complex objects). |
| Information Encoding | AIT - Anterior inferior temporal cortex (Implicated in representing visual features for classification and conceptualization). |
| Transform Calculation | VLPFC - Ventrolateral prefrontal cortex (Rule learning for pattern matching). |
| Reward Evaluation | OFC - Orbitofrontal cortex (Representation of received reward). |
| Information Decoding | PFC - Prefrontal cortex (Executive functions). |
| Working Memory | PPC - Posterior parietal cortex (Temporary storage and manipulation of information). |
| | DLPFC - Dorsolateral prefrontal cortex (Manipulation of higher level data related to cognitive control). |
| Action Selection | Str (D1) - Striatum. |
| | Str (D2) - Striatum. |
| | Str - Striatum. |
| | STN - Subthalamic nucleus (Input to the ''hyper direct pathway'' of the basal ganglia). |
| | VStr - Ventral striatum (Representation of expected reward to generate reward prediction error). |
| | GPe - Globus pallidus externus (Projects to other parts of the basal ganglia to modulate their activity). |
| | GPi/SNr - Globus pallidus internus and substantia nigra pars reticulata. |
| | SNc/VTA - Substantia nigra pars compacta (Control learning in basal ganglia connections). |
| Routing | Thalamus - Coordinates/monitors interactions between cortical areas. |
| Motor Processing | PM - Premotor cortex (Planning and guiding of complex movement). |
| Motor Output | M1 - Primary motor cortex (Generates motor control signals). |
| | SMA - Supplementary motor area (Complex movements). |

According to Chris Eliasmith [p.276]:

Perhaps the most interesting feature of Spaun is that it is united.

Thus the performance of each task is less important than the fact that all of the tasks were performed by the exact same model.

No parameters were changed nor parts of the model externally ''rewired'' as the tasks varied. The same model performs all of the tasks in any order.

Spaun is both physically and conceptually unified:

This single model has neuron responses in visual areas that match known visual responses, as well as neuron responses and circuitry in basal ganglia that match known responses and anatomical properties of basal ganglia, as well as behaviorally accurate working memory limitations, as well as the ability to perform human-like induction, and so on [p.276].

It is all quite impressive, actually.

2.7. Evaluating the Model.

Still, there are many unsolved problems in this land of modelling.

In the book (''How to Build a Brain'') a couple of problems with many contemporary models are mentioned:

Jackendoff (2002) has identified his first challenge for cognitive modelling as ''the massiveness of the binding problem''

(Versions of the problem can be found here).

...

It highlights that a good cognitive architecture needs to identify a binding mechanism that scales well [p. 300].

And, a good cognitive architecture must account for the functions of, and relationships between, working and long-term memory.

But:

The nature of long-term memory itself remains largely mysterious. Most researchers assume it has a nearly limitless capacity, although explicit estimates of capacity range from 10^9 to 10^20 bits.

So, although not limitless, the capacity is probably extremely large.

Significant amounts of work on long-term memory have focussed on the circumstances and mechanisms underlying consolidation.

Although various brain areas have been implicated in this process (including hippocampus, entorhinal cortex, and temporal cortices), there is little solid evidence of how specific cellular processes contribute to long-term memory storage.

...

A good cognitive architecture must account for the functions of, and relationships between, working and long-term memory [p. 306].

And then the models must be robust:

There is little evidence that cortex is highly sensitive to the destruction of hundreds or even thousands of neurons. Noticeable deficits usually occur only when there are very large lesions, taking out several square centimeters of cortex (1 cm^2 is about 1 million neurons, and the average human adult has about 2500 cm^2 of cortex [p. 410]). If such considerations are not persuasive to proponents of these models, then the best way to demonstrate that the models are robust is to run them while randomly destroying parts of the network, and introducing reasonable levels of noise. In short, robustness needs to be empirically shown [p. 345].

The problem of inference should also be addressed by a good cognitive model.

For example, when I assume that an object has a backside even though I cannot see it, I am often said to be relying on mechanisms of perceptual inference [p.374].

Current models do not have a unified theory of inference, but Eliasmith thinks the SPA can help us understand inference by characterizing it in the context of a functional architecture.
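Returning briefly to the robustness test described above (randomly destroying parts of the network and adding noise), here is a minimal sketch of what such an experiment could look like. It is a toy under my own assumptions, not the procedure from the book: a value is represented redundantly by many noisy units and decoded by averaging, and the function names, population size and noise level are all hypothetical.

```python
import random


def encode(value, n_units, noise_sd, rng):
    """Each hypothetical unit carries the value plus independent noise."""
    return [value + rng.gauss(0.0, noise_sd) for _ in range(n_units)]


def lesion(activities, fraction, rng):
    """Randomly destroy a fraction of the units (at least one survives)."""
    n_keep = max(1, int(round(len(activities) * (1.0 - fraction))))
    return rng.sample(activities, n_keep)


def decode(activities):
    """Read the value back out as the average of the surviving units."""
    return sum(activities) / len(activities)


def mean_error(fraction, trials=100, n_units=1000, noise_sd=0.5, seed=0):
    """Average decoding error after lesioning the given fraction of units."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        value = rng.uniform(-1.0, 1.0)
        survivors = lesion(encode(value, n_units, noise_sd, rng), fraction, rng)
        total += abs(decode(survivors) - value)
    return total / trials


errors = {fraction: mean_error(fraction) for fraction in (0.0, 0.5, 0.99)}
for fraction, error in errors.items():
    print(f"lesioned {fraction:.0%}: mean error {error:.3f}")
```

In this toy setup, removing half of the units barely changes the decoding error. Only when almost all units are gone does the error grow substantially, which is the kind of graceful degradation a robust model should show.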

The SPA is a work in progress, and some aspects of cognition have not been touched upon yet.

For example, emotion and stimulus valuation are largely absent. These play a crucial role in guiding behavior and need to be integrated into the SPA [p. 380].

Actually, late in the book, Eliasmith admits that the SPA

Is less of an architecture, and more of an architecture sketch combined with a protocol.

Indeed, there are a lot of things that will be very difficult to do in the SPA as it stands now (e.g. building a model for language).

Not all that surprising, as:

The (behavioral) sciences are far too young to have done anything but scratch the surface of possible cognitive theories [p. 384].

But never mind, this was truly a great workshop, providing inspiration for years to come!

- - - July 31st 2013 - - -

2.8. Wired articles about building brain models.

Wired magazine had interesting articles about building brain models in both June and August (2013).

According to Wired, August 2013:

The thing to realize is that recreating human intelligence is impossible. We can't even grasp how the brain works.

''Clearly, we can't build a brain'', says Dharmendra Modha. ''We don't have the organic technology, nor do we have the knowledge''.

But according to the Wired article, Modha is trying nonetheless:

Known as a ''neuro-synaptic core'', their new chip includes hardware that mimics neurons and synapses - the basic building blocks of our nervous system and the connections between them.

And in recreating the basics of the brain, Modha says, the chip eschews traditional methods of computer design.

''This tiny little neurosynaptic core really breaks from the von Neumann architecture'', Modha says.

''It integrates processor, memory and communication''.

The idea is that you could then piece multiple cores together - creating ever larger systems, spanning an ever larger number of fake neurons and synapses.

...

But he is quick to say that he can only hope that his cognitive computing work will have the lasting impact of the von Neumann architecture. And he is clear that he and his team haven't even begun to build real-world applications.

Back in June 2013 Wired had another article about brain simulations:

Sure, functional magnetic resonance imaging might be as detailed as ever before.

And, sure, fMRI might be the favourite tool of behavioural neuroscience, where scans can routinely track which parts of the brain are active while watching a ballgame or having an orgasm, albeit only by monitoring blood flow through the gray matter (the brain viewed as a radiator...).

But to really know we must dig deeper.

At the simplest level, comparisons to computers are helpful:

The synapses are roughly equivalent to logic gates in a circuit, and axons are the wires. Combinations of inputs to neurons determine an output. Memories are stored by altering the wiring. (Animal) Behaviour is correlated with the patterns of firing. Etc.

It gets more complicated though:

Yet, when scientists study these systems more closely, such reductionism looks nearly rudimentary.

...

There are dozens of different neurotransmitters (dopamine and serotonin, to name two) plus as many neuroreceptors to receive them. (And) there are more than 350 types of ion channels, the synaptic plumbing that determines whether a neuron will fire.

So, at its most fine grained, at the level of molecular biology, neuroscience attempts to describe and predict the effect of neurotransmitters one ion channel at a time.

Again from wired, june 2013:

Markram thinks that the greatest potential achievement of his sim would be to determine the causes of approximately 600 known brain disorders. ''It is not about understanding one disease'', he says. ''It is about understanding a complex system that can wrong in 600 ways. It is about finding the weak points''.The wired article continues:

Rather than uncovering treatments for individual symptoms, he wants to induce diseases in silico by building explicitly damaged models, - and then find workarounds for the damage.

...

The power of Markrams approach is that the lesioning could be carried out endlessly in a supercomputer model, and studied in any scale, from molecules to the brain as a whole.

...

(We want to) see the flow of neurotransmitters and ions, but we could also experience the illusions (of certain brain disorders).

''You want to step inside the brain'', Markram says. He will achieve this by connecting his model brain to sensor-laden robotics and simultanously record what the robot is sensing and ''thinking'', as it explores the physical environment (correlating audiovisual signals with simulated brain activity as the machine learns about the world).

In hype driven context (such as his 2009 TED talk), Markram has hinted at the possibility that a sim embodied in a robot might become conscious.Read more on the Human Brain Project homepage.

...

When pressed, he shows a rare sense of modesty. ''A simulation is not the real thing'', he says. ''I mean, it is a set of mathematical equations that are being executed to recreate a particular phenomenon''.

Markram's job, simply put, is to get those equations right.

Humboldt University Hallways.

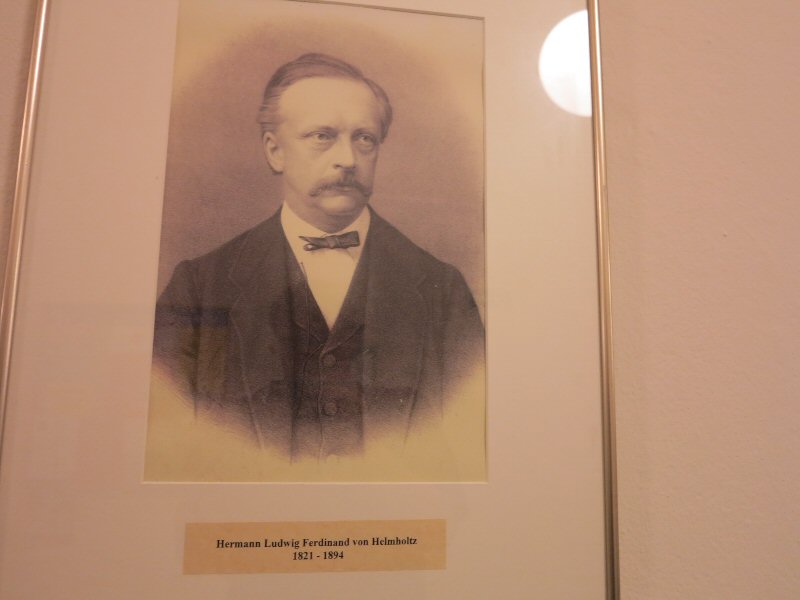

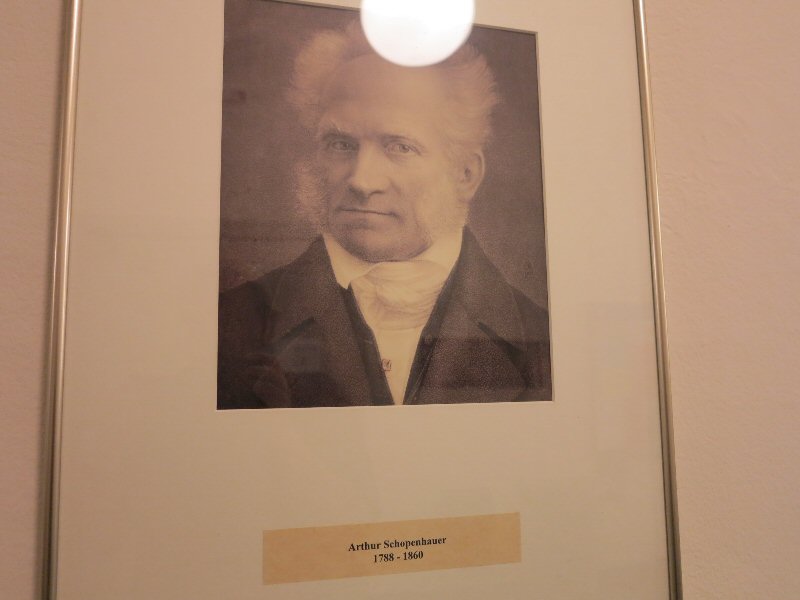

After the workshop I explored some of the Humboldt University hallways.

Portraits on the walls remind one that this was indeed once home to many of Germany's greatest thinkers.

Helmholtz accepted his final university position in 1871, as professor of physics at Humboldt University of Berlin

(Picture hanging in the hallway, Main building, Humboldt University).

Schopenhauer became a lecturer at the Humboldt University of Berlin in 1820

(Picture hanging in the hallway, Main building, Humboldt University).

3. Impressions from August 1st 2013.

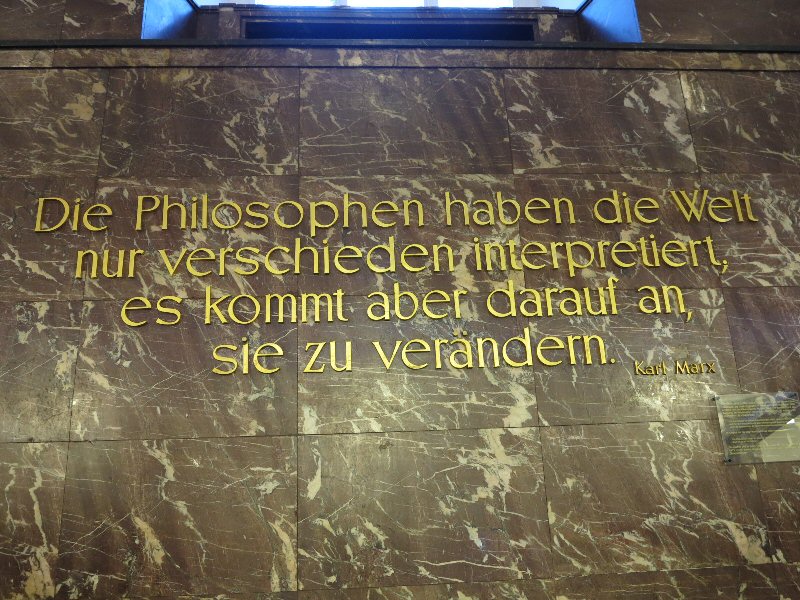

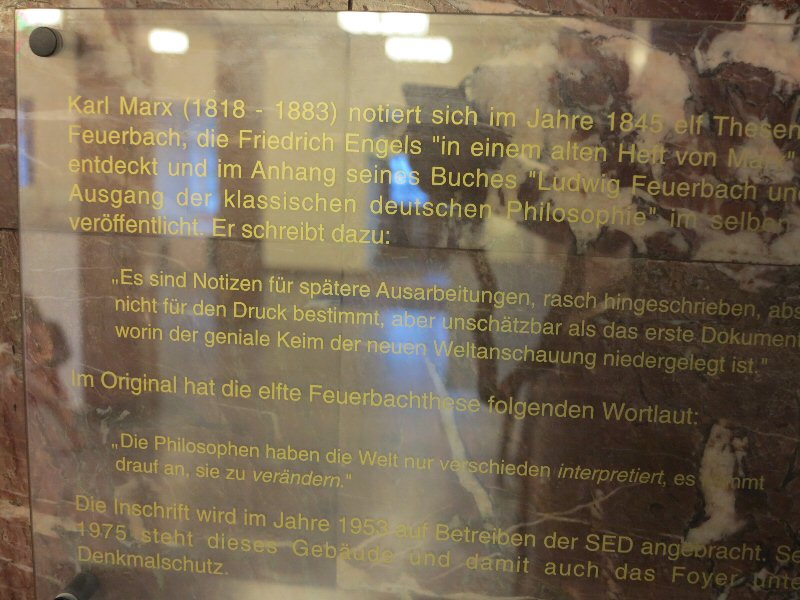

The next day started in the impressive Foyer of the Humboldt University.

At the main entrance stairway I took a look at the preserved Karl Marx quotes...

Karl Marx quote put up by the SED in the Foyer (Main Entrance stairway), Main building, Humboldt University of Berlin.

Sign just below the Karl Marx quote (bottom right in the picture above) explains the historical context behind the quote

(Foyer, Main building, Humboldt University).

3.1. Open EEG.

On my way to the John Duncan lecture, I suddenly found myself deep in a very interesting talk about build-it-yourself EEG equipment (See the Open EEG project). A great opportunity to talk a little about my NeuroSky experiments. But also to learn a little about what others are up to in this world of build-it-yourself EEG experiments.

A great way to start a day!

3.2. A Core Brain System in Assembly of Cognitive Episodes.

Next up was John Duncan with a talk about ''A Core Brain System in Assembly of Cognitive Episodes''.

In his book, ''How Intelligence Happens'' (See my review: Here), John Duncan writes:

''Anything we do involves a complex, coordinated structure of thought and action. For any task, this structure can be better or worse - the structure is optimized as we settle into the task.'' [p. 93]

The Russian neuropsychologist Alexander Luria and others have suggested that the brain's frontal lobes are essential in this process (finding the best coordinated structure of thought and action). And, certainly, the literature on frontal lobe patients shows deficits in all sorts of cognitive tests: perception, maze learning, response etc.

In the talk, John Duncan elaborated a little on this, and gave us some examples of patients with frontal lobe damage, who are lost when asked to break a complex task into smaller episodes. Apparently, the lateral frontal cortex holds a dense, distributed model of what is being attended to (stimulus, working memory, motivation). And damage here gives a number of problems, e.g. Goal Neglect (mentioned in the talk as one of the more interesting problems), where the patient knows what to do, but doesn't do it.

In the book, John Duncan writes:

''Three regions seem to form a brain circuit that comes online for almost any kind of demanding cognitive activity'' [p. 104].

This general circuit, or the multiple demand circuit, is described, in the book, as a band of activity on the lateral frontal surface, towards the rear of the frontal lobe, close to the premotor cortex. Plus a band of activity on the medial frontal surface. Plus activity in a region across the middle of the parietal lobe.

For any given demand, this general circuit will be joined with brain areas specific for the particular task.

Indeed, it takes constant effort and vigilance to keep thought and behaviour on track.

And, the general circuit, or the multiple demand circuit, is essential in keeping us on track. So, for demanding tasks it seems reasonable to assume:

''The bigger the damaged area (frontal lobes), the worse the impairment'' and ''Damage within the multiple demand regions is more important than damage outside them'' [p. 110].

Read more in my review of John Duncan's book ''How Intelligence Happens''.

Statues of Max Planck and Theodor Mommsen near the main entrance.

In the coffee break, after the John Duncan talk, I took a look at the statues near the university main entrance.

Max Planck was a University of Berlin professor from 1892 to 1926, where he was succeeded by Erwin Schrödinger.

Theodor Mommsen is regarded as one of the greatest classicists of the 19th century.

His work regarding Roman history is still of fundamental importance for contemporary research.

He received the Nobel Prize in Literature in 1902.

He became a professor of Roman History at the University of Berlin in 1861, where he held lectures up to 1887.

3.3. Constraints on Bayesian Explanations.

In this session speakers were asked to comment on:

- The unique contribution of the Bayesian perspective.

- Why do Bayesian explanations need constraints?

- How do you propose to constrain Bayesian models?

- What type of constraints do we need?

Carlos Zednik, University of Osnabrück, gave us a short introduction to Bayesian Rational Analysis (Anderson, 1990):

Here, Bayesian Rational Analysis is a heuristic strategy for algorithm discovery.

Apparently, you just follow a simple ''cookbook'' (see Rational Analysis):

- Specify task

(Anderson: Specify precisely the goals of the cognitive system).

- Specify the structure of the environment

(Anderson: Develop a formal model of the environment to which the system is adapted).

- Specify computational cost and limitation

(Anderson: Make the minimal assumptions about computational limitations).

- Identify an optimal solution to the task

(Anderson: Derive the optimal behavioral function given the steps above).

- Compare this optimal solution with behaviour

(Anderson: Examine the empirical literature to see whether the predictions of the behavioral function are confirmed).

- Introduce more constraints, refine

(Anderson: Repeat, iteratively refining the theory).

If the algorithm fits, then the task analysis (that guided us to the algorithm) is considered to be true as well...

But this might not be true! Other algorithms might have fitted as well.

Certainly, there is more to finding a good explanation than making a lookup in the AI literature, and then finding a good fit.
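To make the worry concrete, here is a minimal sketch of my own (not something shown in the talk). The task, the coin bias `P_HEADS` and the function names are all illustrative assumptions. An observer has to decide which of two equally likely biased coins produced a series of flips. The Bayes-optimal decision and a plain ''go with the majority'' shortcut turn out to give identical behaviour, so a good behavioural fit alone cannot tell us which algorithm is actually running:

```python
import math
from itertools import product

P_HEADS = 0.7  # assumed bias of the heads-biased coin; the other coin uses 1 - P_HEADS


def bayes_optimal(flips: str) -> str:
    """Pick the more probable coin under equal priors (the 'optimal solution')."""
    heads, tails = flips.count("H"), flips.count("T")
    # Log posterior odds of heads-biased vs tails-biased:
    # log[(p / (1 - p)) ** (heads - tails)] = (heads - tails) * log(p / (1 - p))
    log_odds = (heads - tails) * math.log(P_HEADS / (1 - P_HEADS))
    if log_odds > 0:
        return "heads-biased"
    if log_odds < 0:
        return "tails-biased"
    return "undecided"


def majority_heuristic(flips: str) -> str:
    """A non-Bayesian shortcut: go with whichever face showed up more often."""
    heads, tails = flips.count("H"), flips.count("T")
    if heads > tails:
        return "heads-biased"
    if heads < tails:
        return "tails-biased"
    return "undecided"


def all_sequences_agree(max_len: int) -> bool:
    """Check that both strategies give the same answer on every flip sequence."""
    for n in range(max_len + 1):
        for seq in product("HT", repeat=n):
            flips = "".join(seq)
            if bayes_optimal(flips) != majority_heuristic(flips):
                return False
    return True


if __name__ == "__main__":
    print(bayes_optimal("HHT"), majority_heuristic("HHT"))
    print("identical behaviour:", all_sequences_agree(8))
```

In this toy case the two strategies cannot be told apart by behaviour, which is exactly the sense in which an unconstrained fit under-determines the explanation.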

For the ''anti-Bayesian'', Bayesian explanations are under-constrained and therefore not useful.

Whereas the ''pro-Bayesian'' sees Bayesian explanations as useful even if they are under-constrained.

Matteo Colombo (Tilburg center for Logic and Philosophy of Science) continued with thoughts about the Bayesian approach to understanding cognition.

Here the Bayesian approach was seen as a unifying mathematical language for framing cognition:

The Bayesian approach promises to afford an unprecedented explanatorily unification to cognitive and brain sciences. So, these are exciting time for those of us interested in scientific explanation, the foundations of cognition and the prospects of a unified science of how the brain works.

If we assume an isomorphism between our behavioural models and the underlying neural model, it follows that Bayesian unified models can place fruitful constraints on the underlying neural models.

But, so far, it is not clear whether we have actually seen examples of such constraints with explanatory power on the neural level.

Which left us pretty much where we started (''Constraints on Bayesian Explanation'' - Johan Kwisthout, Matteo Colombo, Carlos Zednik and David P. Reichert):

Yes, the critics may be right that cognitive theories are explanatorily useful only if properly constrained (and proponents are wrong in their denial of this).

...

A crucial feature of adequate explanations in the cognitive sciences is that they reveal aspects of the causal structure of the mechanism that produces the phenomenon to be explained. The kind of unification afforded by the Bayesian framework to cognitive science does not necessarily reveal the causal structure of a mechanism.

David P. Reichert (Brown University) followed with comments about ''What are Bayesian models all about?'':

- The world or the mind?

- Optimality and Rationality?

- Inference under uncertainty?

The speaker thought the answer was all of the above.

Still, when we are talking about brain models, it is obviously possible ''to work at identifying challenges faced by computational neuroscience models that seek to directly map Bayesian computations onto neuronal implementations''.

Johan Kwisthout ended the session with a talk about ''Marr's three levels of analysis'':

- Computational level

(What does the system do. What problems does it solve or overcome).

- Algorithmic/representational level

(How does the system do what it does, specifically, what representations does it use).

- Physical level

(How is the system physically realized).

Surely, if we are interested in cognitive explanations, then we do want solutions to be implementable in all of Marr's 3 levels!

Dealing with all 3 levels will make our models more robust and reveal more relevant constraints.

- - - I I I - - -

University courtyard.

After the session ''Constraints on Bayesian Explanations'', it was time for lunch.

We found a free spot in the university courtyard, next to a memorial for the pre-war book burnings.

In Berlin, history is indeed ever present.

According to Wikipedia:

After 1933, like all German universities, Humboldt University was affected by the Nazi regime. It was from the university's library that some 20,000 books by ''degenerates'' and opponents of the regime were taken to be burned on May 10 of that year in the Opernplatz (now the Bebelplatz) for a demonstration protected by the SA.

See the Wiki article ''Erinnerung an die Bücherverbrennung'' for more.

3.4. Concepts and Categories.

Tom Gijssels talked about ''Space and Time in the Parietal Cortex: fMRI Evidence for a Neural Asymmetry''.

It was all about space and time in the parietal cortex.

Here we investigated whether space and time activate the same neural structures in the inferior parietal cortex (IPC) and whether the activation is symmetric or asymmetric across domains.

In an experiment people looked at a line. The longer the line was, the longer subjects thought an experiment took...

The behavioral results replicated earlier observations of a space-time asymmetry: Temporal judgments were more strongly influenced by irrelevant spatial information than vice versa.

fMRI shows areas of overlap for space and time. And, apparently, the shared region of IPC was activated more strongly during temporal judgments than during spatial judgments.

The speaker concluded:

They suggest that space and time are represented by distinct but closely interacting neural structures, either in the IPC, or in the form of a broadly distributed network.

Felix Hill, Cambridge University, talked about the abstract and the concrete.

A large body of empirical evidence indicates important cognitive differences between abstract concepts, such as guilt or obesity, and concrete concepts, such as chocolate or cheeseburger. It has been shown that concrete concepts are more easily learned and remembered than abstract concepts, and that language referring to concrete concepts is more easily processed.

Still, there is no standard account of how the distinction between abstract and concrete should be drawn.

Indeed, the abstract and the concrete might form a continuum...

In a study the speaker finds that increasing conceptual concreteness gives people ''Fewer, but stronger associates''.

I.e. abstract concepts have more associates.

In the mind, we assume that abstract words are organized according to associations, and concrete words are organized according to similarity (more so than abstract concepts).

According to the author: Ongoing work by Lawrence Barsalou might clarify this further.

I.e. he is currently doing work on concrete vs. abstract activation patterns in fMRI.

And, hopefully, this work will help clarify what is really going on!

3.5. Philosophy of Cognitive Science.

Joshua Mugg, Department of Philosophy, Toronto, talked about ''Simultaneous Contradictory Belief and the Two-System Hypothesis'':

The two-system hypothesis states that there are two kinds of reasoning systems, the first of which is evolutionarily old, heuristically (or associatively) based, automatic, fast, and is a collection of independent systems. The second is evolutionarily new, perhaps peculiar to humans, is rule-based, controlled, slow, and is a single token system.

It seems obvious that ''if you have held contradictory views at the same time, you must have two separate reasoning systems''? And it seems reasonable to suggest that there might be a fast system (associative) and a slow system (rule-based) working simultaneously in the brain.

Proving this might not be so easy though. But the speaker thought it should be possible to come up with experiments that could help give good evidence that humans indeed have two distinct reasoning systems (For more, see talk paper).

Kevin Reuter, Department of Philosophy, Ruhr University Bochum, talked about ''Inferring Sensory Experiences''.

Indeed, experiences are not open to public confirmation. So, talking about experiences is pretty difficult.

Clearly, people think that things appear in a certain way because of our sensory system.

But what about ''experience itself'' then?

According to the speaker, ''experience'' is (a) appearances that are (b) subjective.

A lot of discussion followed in the session, and afterwards. Clearly, ''experience itself'' is not an easy topic.

Hegel bust, Humboldt Universität zu Berlin

(Hegel accepted the chair of philosophy at the University of Berlin in 1818).

3.6. Plenary talk. Cooperative Machines: Coordinating Minds and Bodies Between People and Social Robots.

The plenary talk was by Cynthia Breazeal, known for her work in robotics, and recognized as a pioneer of Social Robotics and Human Robot Interaction.

She developed the robot Kismet for looking into expressive social exchange between humans and humanoid robots.

Her talk started with an introduction to the Leonardo Robot.

According to Wikipedia:

The goal of creating Leonardo was to make a social robot. Its motors, sensors, and cameras allow it to mimic human expression, interact with limited objects, and track objects. This helps humans react to the robot in a more familiar way. Through this reaction, humans can engage the robot in more naturally social ways. Leonardo’s programming blends with psychological theory so that he learns more naturally, interacts more naturally, and collaborates more naturally with humans.

Leonardo can grasp ''good'' things, and show aversion for ''bad'' things.

Next up was the robot Maddox that can work with humans to find items

(E.g. see: Training a Robot via Human Feedback: A Case Study).

Here, ''trust'' is key, and the focus of much of the remaining part of the talk.

Trust is at the root of any good cooperation. And when it comes to human-robot relations, trust is obviously also very important. I.e. it is important that humans trust the robots.

Accordingly, Cynthia Breazeal then talked about some of the signals her team wants the robots to understand (when a human displays them). And, perhaps more interestingly, signals she wants to build into the robots, in order to make them appear more trustworthy.

What are the cues for trust?

- Facial expressions can make a robot/human appear more trustworthy.

And this method is a good way to build swift trust/spontaneous trust.

- Crossed arms can be used to send ''less trust'' cues.

Obviously, it is a huge task to make robots display and understand such signals. But, according to Cynthia Breazeal, it is essential if we are ever going to have robots work alongside humans in real world scenarios.

Interesting videos demonstrated that it is indeed all well under way.

Certainly, a great talk to end the day with!

4. Impressions from August 2nd 2013.

4.1. Plenary talk: Shared Agency.

The day started with Michael E. Bratman's talk about ''Shared Agency''.

Shared intentional activity could be something like walking together, dancing, building a house etc.

In order to understand basic forms of sociality, Bratman proposes that planning concepts might offer some insights.

Once certain planning structures are learned by the individual, we can expect them to play central roles in our sociality.

Partial plans get filled in with more detail as they play out.

It follows that intentions are plan states.

In this light, shared intention becomes coordination of action and planning in pursuit of a certain goal.

According to Bratman, it is reasonable to speculate that individual planning can be used (through shared agency) to arrive at collective skills.

During Q & A, one member of the audience wanted to hear more about shared agency in the infant and parent case.

An awesome question, according to Bratman, but he wouldn't claim to be an expert on how these skills come about, and how they might be put together.

Another member of the audience wanted to know more about two robots building a house.

In the scenario, the robot assigned to painting might not be so good at working together with other robots.

Rather logically, Bratman thought this was a robot design issue, where better general robot design should improve the situation...

More Humboldt University Hallways:

After the talk it was again time to explore some of the Humboldt University hallways...

4.2. Minimal Nativism. How Does Cognitive Development Get Off The Ground?

Symposium. August 2nd. Minimal Nativism: How does cognitive development get off the ground.

Tomer Ullman, Josh Tenenbaum, Noah Goodman, Shimon Ullman and Elizabeth Spelke.

When constructing a mind, what are the basic materials, structures and blueprints a young child has to work with? Are most of the structures already in place, with children merely working to embellish them? Such a view might describe a strong innate core hypothesis (Spelke et al., 1994).

...

Or does the child begin with more of an empty plain, and an ability to construct whatever is necessary out of whatever materials are at hand at the time? Such a view might be more along the lines of classic empiricism (Quine, 1964).

a) Shimon Ullman started the symposium with the talk ''From simple innate biases to more complex concepts''.

If something is computationally difficult, but infants do it easily, it suggests that these skills might be based on innate abilities...

Finding hands in images is a difficult problem in computer vision.

Yet, detecting hands and sensing gaze direction, and using them to make inferences and predictions, are natural for humans.

Interestingly, there might nevertheless be a way to explain how humans could learn a task as complex as finding hands in a scene.

I.e. one could start with movable events - what causes things to come and go?

If available, gaze will probably provide additional information on what is going on in a scene.

(And) following gaze is something 3-6 month olds can do. So, when you have a move event - look at the gaze - it is a good guess that the gaze is on the move event.

Domain-specific ''proto concepts'' can guide the system to acquire meaningful concepts, which are significant to the observer, but statistically inconspicuous in the sensory input. Such proto-concepts may exist in other domains, forming a bridge between the notion of innate conceptual knowledge and that of learning mostly from sensory experience.

b) Noah Goodman continued with a talk about ''Minimal nativism and the language of thought'':

We know that universal formal languages can be built with a very small number of primitive operations. Informally, complex concepts might be built in a similar way?

In the talk it was argued that:

A universal language of thought together with a powerful learning mechanism is able to construct many of the needed concepts very quickly.

If we look at ''Concepts as function definitions in a programming language (Lisp/Prolog)'',

then ''Adding a new concept can be seen as (Bayesian Induction) that extends the concept library''.

Take as innate:

- Representation capacity.

- Inductive learning mechanism.

And then move on to have:

- Broadly useful concepts can be learned by an ideal learner.
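To get a feel for what ''concepts as function definitions'' plus Bayesian induction could look like, here is a small sketch of my own (not code from the talk). The number domain, the concept names, the description lengths and the ''smaller concepts explain the examples better'' likelihood are all assumptions made for illustration. Each candidate concept is a tiny function; the prior favours short definitions, and the posterior tells us which concept to add to the library after seeing a few examples:

```python
# Assumed toy domain: the integers 1..32.
DOMAIN = range(1, 33)

# Hypothetical concept library: name -> (definition as a function, description length).
# Shorter definitions get a higher prior (2 ** -length).
CONCEPTS = {
    "any_number": (lambda n: True, 1),
    "even": (lambda n: n % 2 == 0, 1),
    "odd": (lambda n: n % 2 == 1, 1),
    "multiple_of_four": (lambda n: n % 4 == 0, 2),
    "power_of_two": (lambda n: n & (n - 1) == 0, 2),
}


def posterior(examples):
    """Posterior over concepts given positive examples drawn from the domain."""
    scores = {}
    for name, (predicate, length) in CONCEPTS.items():
        prior = 2.0 ** -length
        extension_size = sum(1 for n in DOMAIN if predicate(n))
        if all(predicate(x) for x in examples):
            # Assumed likelihood: examples are sampled uniformly from the concept,
            # so concepts with a small extension explain the data better.
            likelihood = (1.0 / extension_size) ** len(examples)
        else:
            likelihood = 0.0
        scores[name] = prior * likelihood
    total = sum(scores.values())
    return {name: score / total for name, score in scores.items()}


if __name__ == "__main__":
    beliefs = posterior([4, 8, 16])
    for name, p in sorted(beliefs.items(), key=lambda item: -item[1]):
        print(f"{name:18s} {p:.3f}")
```

With the examples 4, 8 and 16 the learner ends up preferring `power_of_two`, even though `even` and `any_number` are simpler, because the simpler concepts make those particular examples look like a coincidence.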

c) Next up was Tomer Ullman with a talk about ''Trajectory for learning intuitive physics and psychology - a case study in the origins of physical and psychological knowledge''.

Cognition can be viewed as a program, albeit an incredibly complex one. Cognitive development then is the process by which the mind moves from one program to another (using induction, synthesis).

If we start with the knowledge that:

- Objects move under constraints of potentials/forces.

- Agents move according to utility and cost.

then we can go from a simple world of:

- Some Spelke objects and ''forces''.

- And a unique class of ''force generators''.

to something more complex with novel global forces.

Eventually we might even end up with ''force generators'' that have goals.

d) Elizabeth Spelke talked about ''Core Knowledge and Learning''.

The talk was another take on (Here: Children learning arithmetic):

Building new systems of concepts by productively combining concepts from the core system.

With some interesting comments about how language might help in the production of new concepts (Under the following headlines):

- Language serves as a filter on what concepts are useful to deal with.

- Combinatorial rules of language provide the medium to build new concepts.

- - - I I I - - -

4.3. Concept Acquisition.

Jackson Tolins talked about ''Exploring the Role of Verbal Category Labels in Flexible Cognition''.

Turns out that labeled categories are learned faster, and are subsequently more robust.

Indeed, it seems that verbal labels influence category learning, improving both speed of learning and strength of representation.

Haline E. Schendan talked about ''Mental Simulation for Grounding Object Cognition''.

The main proposed mechanism for how memory is grounded is mental simulation.

...

seeing an object or reading its name (e.g., “dog”) re-enacts associated features that were stored during earlier learning experiences (e.g. its shape, color, motion, actions with it), thereby constructing cognition, memory, and meaning.

The talk (and paper) went through evidence from cognitive neuroscience of mental imagery, object cognition, and memory that supports a multi-state interactive (MUSI) account of automatic and strategic mental simulation mechanisms that can ground memory, including the meaning, of objects in modal processing of visual features.

Super interesting stuff, which (btw) gave me associations to the Situated Conceptualization talk at CogSci2012 (that also dealt with Mental Simulation, Concepts and Cognition).

- - - I I I - - -

4.4. Cognitive Modelling.

Trevor Bekolay talked about ''Simultaneous unsupervised and supervised learning of cognitive functions in biologically plausible spiking neural networks''.

Within the framework of semantic pointer architecture (See workshop notes in section 2), a ''novel learning rule for learning transformations of sophisticated neural representations in a biologically plausible manner'' was presented.

Apparently, the new learning rule was ''able to learn transformations, as well as the supervised rule''.

Indeed, semantic pointer architecture is supposed to be a biologically realistic framework, so, sure, it should be able to learn, somehow...

Jelmer P. Borst talked about ''Discovering Processing Stages by combining EEG with Hidden Markov Models''.

It is all about identifying processing stages in human information processing:

One of the main goals of cognitive science is to understand how humans perform tasks. To this end, scientists have long tried to identify different processing stages in human information processing.

...

By subtracting the RTs of two tasks that were hypothesized to share all but one processing stage, the duration of that stage could be calculated. A strong – and often problematic – assumption of Donders’ subtractive method is the idea that it is possible to add an entire stage without changing the duration of other stages.

An experiment was set up:

During the study phase of this task, subjects were asked to learn word pairs. In a subsequent test phase – during which EEG data were collected – subjects were again presented with word pairs, which could be the same pairs as they learned previously (targets), rearranged pairs (re-paired foils), or pairs consisting of novel words (new foils). Subjects had to decide whether they had seen the pair during the study phase or not. Successful discrimination required remembering not only that the words were studied (item information), but also how the words were paired during study (associative information).

A Hidden Markov Model was used to analyze the EEG data. The HMM helps determine how many stages the data provide evidence for. Given the number of stages, we can then speculate about what is done in each stage:

The underlying reason for wanting to identify processing stages is explaining how tasks are performed.

...

The first two stages seem to reflect visually perceiving the two words on the screen.

...

We hypothesize that stage 3 reflects item retrieval, to determine whether the presented words were learned during the study phase of the experiment.

...

Stage 4 is skipped in 50% of the trials. We tentatively hypothesize that it reflects working memory consolidation of the items that are retrieved from memory in stage 3. This is not a necessary process, which might explain why it is skipped in 50% of the trials.

As explained above, we assume that stage 5 reflects associative retrievals.

...

Stage 6 of the targets/re-paired foils and stage 4 of the new foils are the final stages in the task. We assume that they reflect response stages.

More confident responses are faster than less confident responses.

Falk Lieder (Translational Neuromodeling Unit, University of Zurich) talked about ''Learned helplessness and generalization''.

Helplessness is a failure to avoid punishment or obtain rewards even though they are under the agent's control.

See experiments by Seligman and Beagley (1975):

If an agent has experienced uncontrollable stress, it will be slower to escape a new unpleasant situation (than a naive agent). A rat that experienced controllable stress will escape the fastest (that is, quicker than the naive rat).

Rats that were "put through" and learned a prior jump-up escape did not become passive, but their yoked, inescapable partners did. Rats, as well as dogs, fail to escape shock as a function of prior inescapability, exhibiting learned helplessness.

Indeed, if you think the world is uncontrollable, you will show signs of reduced reward seeking and failure to avoid bad situations...

Very interestingly, a rather simple model might account for what is going on:

Bayesian learning of action-outcome contingencies at three levels of abstraction is sufficient to account for the key features of learned helplessness, including escape deficits and impairment of appetitive learning after inescapable shocks.
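The model from the talk learns at three levels of abstraction. The sketch below is much simpler and is only my own illustration of the basic idea: a single Beta-Bernoulli belief about whether trying to escape works. The class name, the uniform prior and the `effort_cost` threshold are assumptions, not details from the paper. An agent that has only experienced failed escape attempts ends up believing that trying is not worth the effort, even if the new situation is in fact controllable:

```python
from dataclasses import dataclass


@dataclass
class EscapeBelief:
    """Beta-Bernoulli belief about P(escape succeeds | agent tries)."""
    successes: float = 1.0  # pseudo-counts of an assumed uniform Beta(1, 1) prior
    failures: float = 1.0

    def update(self, escaped: bool) -> None:
        """Bayesian update after one escape attempt."""
        if escaped:
            self.successes += 1
        else:
            self.failures += 1

    @property
    def p_escape(self) -> float:
        """Posterior mean probability that an escape attempt succeeds."""
        return self.successes / (self.successes + self.failures)

    def will_try(self, effort_cost: float = 0.2) -> bool:
        """Try only if the expected benefit (reward scaled to 1) beats the effort cost."""
        return self.p_escape > effort_cost


def after_experience(outcomes) -> EscapeBelief:
    """Build a belief from a history of attempts (True = escaped, False = failed)."""
    belief = EscapeBelief()
    for escaped in outcomes:
        belief.update(escaped)
    return belief


if __name__ == "__main__":
    agents = {
        "naive": after_experience([]),
        "inescapable (yoked)": after_experience([False] * 10),
        "escapable": after_experience([True] * 10),
    }
    for name, belief in agents.items():
        print(f"{name:20s} p_escape={belief.p_escape:.2f} tries={belief.will_try()}")
```

The yoked agent has learned that its actions do not matter and stops trying, while the agent with controllable experience is even more confident than the naive one, which matches the ordering described above.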

- - - I I I - - -

Dorotheenstraße and Universitätsstraße:

4.5. Rumelhart Prize Lecture - It's all connected in the developmental process. The body, the statistics, visual object recognition and word learning.

Linda Smith was this year's recipient of the Rumelhart Prize.

Her research is about the developmental process, and in particular the cascading interactions of perception, action, attention and language as children between the ages of 1 and 3 acquire their first language.

Earlier, developmental psychologists would have argued that as the brain gets bigger and better, it instructs the body to do more complicated things.

Now, in her theory for development of human cognition and action, ''dynamic systems theory'', all the parts of a system work together to create some action, such as a baby successfully grasping a toy. The limbs, the muscles, and the baby's visual perception of a toy all unite to produce the reaching movement.

The talk started with video of the world from a toddler’s point of view

(i.e. video from cameras mounted on helmets worn by 4-month-old and 13-month-old children).

Scary, scary stuff. If your body moves like a toddler, stabilized visual attention is difficult...

A good thing that adults see the world from a more stable position!

Makes attention a lot easier!

Indeed, given the instability of the toddler world, it certainly makes sense (for the toddlers) to be sitting and holding objects in order to get a good look at these objects ...

Holding stabilizes the head, and stabilizes attention.

Indeed, attention is just not the same for toddlers and adults.

E.g. playing together, infants follow hands, while the parents follow gaze and hands.

Studying human cognitive development is obviously important in order to understand human cognition.

But learning more about how things are put together, obviously also opens up the possibility of replacing certain steps in the development (if these steps are not possible for some reason for one particular child).

Very interesting and important stuff!

- - - I I I - - -

5. Impressions from August 3rd 2013.

5.1. Plenary talk: Core Social Cognition.

Elizabeth Spelke delivered the keynote speech Saturday morning (Aug 3rd).

Spelke has had a lifelong interest in the cognitive aspects of child psychology.

Experiments have convinced her that babies have a set of highly sophisticated, innate mental skills.

This provides an alternative to the hypothesis originated by William James that babies are born with no distinctive cognitive abilities, but acquire them all through education and experience (See more on Wiki).

The talk started with some comments about the complexity of social cognition.

Sure, everyone knows that mature social cognition is complicated. So, obviously, it would be nice if infant social cognition wasn't equally complicated. But, perhaps we shouldn't get our hopes up too high...

- 7-month-old infants are computing and taking into account other people's perspectives.

- 3-6 month olds seem to understand the concept of helping.

- At 14 months children will actively try to help.

We were then introduced to the hypothesis that we might have two innate systems that help us deal with social cognition (See core systems):

A system to deal with agents and a system to deal with social beings.

At 10 months the babies then start to combine these systems.

The core agent system:

Represents agents and their actions.

- Infants represent agents' actions as directed towards goals through means that are efficient.

- Infants expect agents to interact with other agents, both contingently and reciprocally.

- Although agents need not have faces with eyes, when they do, even human newborns and newly hatched chicks use their gaze direction to interpret their actions, as do older infants.

In contrast, infants do not interpret the motions of inanimate objects as goal-directed.

There might also be a core system for identifying and reasoning about potential social partners and social group members:

Research in evolutionary psychology suggests that people are predisposed to form and attend to coalitions whose members show cooperation, reciprocity, and group cohesion.By 8 month babies expect members of a social group to behave like other members of the group.

An extensive literature in social psychology confirms this predisposition to categorize the self and others into groups. Any minimal grouping, based on race, ethnicity, nationality, religion, or arbitrary assignment, tends to produce a preference for the in-group, or us, over the out-group, or them. This preference is found in both adults and children alike, who show parallel biases toward and against individuals based on their race, gender, or ethnicity.

...

These findings suggest that the sound (language) of the native language provides powerful information for social group membership early in development.

That raises the possibility of a core system that serves to distinguish potential members of one’s own social group from members of other groups.

By 9-12 months, shared attention, pointing, giving and accepting objects are instrumental actions with social meaning.

And then, from 9 months onwards, language sets in, which gives the possibility of combining elements from the core systems to form even better social cognition (helping us to understand cooperation, competition etc.).

Very convincingly presented. And obviously a very important topic!

- - - I I I - - -

5.2. Structured Cognitive Representations and Complex Inference in Neural Systems.

Symposium. August 3rd. Structured Cognitive Representations and Complex Inference in Neural Systems.

Samuel Gershman, Joshua Tenenbaum, Alexandre Pouget, Matthew Botvinick and Peter Dayan.

Samuel Gershman started with a talk about ''From Knowledge to Neurons'':

How can neurons express the structured knowledge representations central to intelligence? This problem has been attacked many times from various angles. I discuss the history of these attempts and situate our current understanding of the problem.

Somewhat critical of the BRAIN Initiative and the Blue Brain project, Gershman started the talk with a Marr quote:

Trying to understand perception by studying only neurons is like trying to understand bird flight by studying only feathers: It just cannot be done. In order to understand bird flight, we have to understand aerodynamics; only then do the structure of feathers and the different shapes of birds' wings make sense.

Dealing with issues such as the Hard Problem of Consciousness, we might not even know what we are looking for.

Sure, we might have very detailed images of the brain, but where does it stop?

At the level of atoms?

Obviously, there need to be principles behind the brain organization (if we are ever going to learn more about it).

Eventually, we might be able to deal with questions like: a) how can we learn structured knowledge with neural circuits, b) how can we represent structured probability distributions with neural circuits, etc.

Gershman ended the talk with some thoughts about neuronal models that support probabilistic inference as the way forward.

Still, it might not be that easy to make a map from computational models to actual neurons.

A given population of neurons might encode many domains at the same time.

And how their behaviour might be modulated by other areas is not properly understood.

etc.

Matthew Botvinick's talk was about the question: ''How do hierarchical representations of action or task structure initially arise''?

Here, it was approached as a learning problem.

Where

useful component skills can be inferred from experience. Behavioral evidence suggests that such learning arises from a structural analysis of encountered problems, one that maximizes representational efficiency and, as a direct result, decomposes task into subtasks by ''carving'' them at their natural ''joints''.

The key question here was, of course, how this analysis and optimization process might be implemented neurally.

Here, we were presented with behavioural and fMRI data in support of a hypothesis that a hierarchical task structure might arise as a natural consequence of predictive representation.

Alexandre Pouget talked about ''modeling the neural basis of complex intractable inference''.

It is becoming increasingly clear that neural computation can be formalized as a form of probabilistic inference. Several hypotheses have emerged regarding the neural basis of these inferences, including one based on a type of code known as probabilistic population codes or PPCs.

Very interestingly:

We have started to explore how neural circuits could implement a particular form of approximation, called variational Bayes, with PPCs.

Finally, Peter Dayan gave some interesting thoughts about episodic and semantic structure.

In the talk it was all about ''What is in a Face'' (i.e. there is semantic structure as to where the eyes and nose should be in a face, and episodes occur within that structured world).

Indeed, a great symposium.

- - - I I I - - -

Albert Einstein came to Berlin in 1914 after being appointed director of the Kaiser Wilhelm Institute for Physics (1914 - 1932) and a professor at the Humboldt University of Berlin, with a special clause in his contract that freed him from most teaching obligations.

5.3. Long-term Memory.

Michael Mozer, University of Colorado, talked about ''Maximizing Students' Retention Via Spaced Review: Practical Guidance From Computational Models Of Memory''.

Sadly:

During the school semester, students face an onslaught of new material. Students work hard to achieve initial mastery of the material, but soon their skill degrades or they forget.

And

Although students and educators both appreciate that review can help stabilize learning, time constraints result in a trade off between acquiring new knowledge and preserving old knowledge. To use time efficiently, when should review take place?

In the experiments, the ACT-R and MCM computer models then acted as proxies for real students (and their memories).

Converging evidence from the two models suggests that an optimal review schedule obtains significant benefits over haphazard (suboptimal) review schedules.

The authors end up identifying

Two scheduling heuristics that obtain near optimal review performance: (1) review the material from μ weeks back, and (2) review material whose predicted memory strength is closest to θ.

Which sounded very useful.
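To make the two heuristics a little more concrete, here is a minimal Python sketch of how they could be written down. It is only an illustration under loud assumptions: the `Item` class, the function names and the example numbers are all made up for this post, and the memory model is a plain exponential forgetting curve used as a stand-in. It is not ACT-R or MCM, and not the authors' implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    week_introduced: int   # week in which the material was first studied
    decay_rate: float      # hypothetical per-week forgetting rate


def review_by_lag(items, current_week, mu):
    """Heuristic 1: pick the material that was introduced mu weeks back."""
    target_week = current_week - mu
    return [item for item in items if item.week_introduced == target_week]


def predicted_strength(item, current_week):
    """Stand-in memory model: exponential decay since introduction.

    Assumption for illustration only; the talk used ACT-R and MCM instead.
    """
    elapsed = current_week - item.week_introduced
    return math.exp(-item.decay_rate * elapsed)


def review_by_threshold(items, current_week, theta):
    """Heuristic 2: pick the item whose predicted strength is closest to theta."""
    return min(
        items,
        key=lambda item: abs(predicted_strength(item, current_week) - theta),
    )


# Made-up course material with made-up forgetting rates.
items = [
    Item("fractions", week_introduced=1, decay_rate=0.14),
    Item("decimals", week_introduced=3, decay_rate=0.30),
    Item("percentages", week_introduced=5, decay_rate=0.20),
]

lag_choice = review_by_lag(items, current_week=6, mu=3)
threshold_choice = review_by_threshold(items, current_week=6, theta=0.5)

print([item.name for item in lag_choice])
print(threshold_choice.name)
```

With these invented numbers the two heuristics pick different material: the lag rule selects whatever was introduced three weeks ago, while the threshold rule selects the item whose predicted strength has drifted closest to θ. How μ and θ should actually be chosen is exactly what the models in the talk were used for, and is not something this sketch tries to answer.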

- - - I I I - - -

5.4. Cognitive Development.

Lucas Butler, Max Planck Institute for Evolutionary Anthropology, Leipzig, talked about ''Preschoolers' ability to navigate communicative interactions in guiding their inductive inferences''.

We can learn from others in many ways:

- Testimony.

- Learning through generic terms.

- Observation and imitation.

- Intentional communication.

Evaluating whether information is generalizable, essential knowledge about a novel category is a critical component of conceptual development. In previous work 4-year-old children were able to use their understanding of whether information was explicitly communicated for their benefit to guide such reasoning, while 3-year-olds were not.

I.e. 4-year-olds appear to be conducting a moment-by-moment analysis to determine whether they are being taught something:

Four-year-olds were adept at navigating pedagogical interactions, judiciously identifying which specific actions in an ongoing interaction were meant as communicative demonstrations for their benefit, while 3-year-olds did not distinguish between the manners of demonstration even in a simpler context.

I.e. ''this learning mechanism for facilitating children’s conceptual development is under construction during the preschool years''.

After the talk, I looked at my neighbor. We were both kind of bewildered by the amazing feats that children pick up between the ages of 3 and 4.

Swapnaa Jayaraman, Department of Psychological and Brain Sciences, Indiana University, talked about ''Developmental See-Saws: Ordered visual input in the first two years of life''.

In another of these amazing baby studies, we learn more about what cameras mounted on babies actually see...

The first two years of life are characterized by considerable change in all domains – perception, cognition, action, and social interactions. Here, we consider the statistical structure of visual input during these two years. Infants spanning the ages from 1 to 24 months wore body-mounted video cameras for 6 hours at home as they engaged in their daily activities.

- Most frequent elements in front of infants are hands and heads

(Not counting backgrounds, like walls).

- A third of the time a head is in front of the infant

(Again, a third of the time the face is really close. Closer than one foot).

- Nearly as often, hands dominate the visual field.

Our data strongly suggest that input is dynamic and ordered. Visual regularities in developmental time may be a rolling wave of "See-Saw" patterns.

Which might be what is needed in order to bootstrap the system:

Developmental process consists not just in the sampling of information but also in the change in the very internal structure of the learner. Considerable evidence from a psycho-biological perspective shows that the ordering and timing of sensory information play a critical role in brain development.

Explaining the finer details of ''determining the architecture'' will probably not be all that easy, I imagine.

...

Between birth and 2 years, human infants travel through a set of highly distinct developmental environments determined first by their early immaturity and then by their growing emotional, motor, and cognitive competence. These ordered environments may help learning systems ''start small'', find the optimal path to the optimal solution, and determine the architecture of the system that does the learning.

Still, capturing on video what the babies actually see, that's an amazing experiment.

Michael Ramscar, Universität Tübingen, talked about ''The myth of cognitive decline''.

Dementia is a real problem. But many other so-called examples of cognitive decline turn out to be predictable when the problems are examined further.

With age come problems with remembering names.

Perfectly predictable, as we get exposed to more names...

Problems with executive functions are also predictable, as exposure to more possible actions makes action selection more difficult.

Across a range of psychometric tests, reaction times slow as adult age increases. These changes have been widely taken to show that cognitive-processing capacities decline across the lifespan. Contrary to this, we suggest that slower responses are not a sign of processing deficits, but instead reflect a growing search problem, which escalates as learning increases the amount of information in memory.

Indeed:

Age increases the range of knowledge and skills individuals possess, which increases the overall amount of information processed in their cognitive systems. This extra processing has a cost.

According to Michael Ramscar, what is actually going on might be ''wisdom'' rather than cognitive decline.

And, learning theory might explain why memories might become harder to individuate and more confusable with age:

Learning theory predicts that experience will increasingly make people insensitive to context, because ignoring less informative cues is integral to learning.

A great talk about what lifelong learning actually means!

...

Because learning inevitably reduces sensitivity to everyday context, retirement is likely to make memories harder to individuate and more confusable, absent any change in cognitive processing, simply because it is likely to decrease contextual variety at exactly the time when, as a result of learning and experience, the organization of older adults' memories needs it most.

- - - I I I - - -

6. Conclusion.

6.1. Future Minds on Berlin Hauptbahnhof Internet monitors.

After the conference I went home by train.

At the impressive Berlin Hauptbahnhof (Bahnhof.de) even the railway platform is an experience.

Here, TV monitors with internet access allow you to do some last-minute internet surfing (in case you have forgotten your smartphone...).

I looked up my own Future Minds page.

Took a picture of it, and thought about the future for a little while, before boarding the ICE train.

Indeed, as Winston Churchill said: ''The empires of the future are the empires of the mind''.

6.2. CogSci 2014 in Quebec.

All brilliant stuff! Certainly, I'm already looking forward to CogSci 2014 in Quebec!

-Simon

Simon Laub

www.simonlaub.net